Setting Up ELK To Centralize & Visualize Logs

Let's set up the following key components one by one:

- Logstash: The server component of Logstash that processes incoming logs

- Elasticsearch: Stores all of the logs

- Kibana: Web interface for searching and visualizing logs

- Logstash Forwarders: Forward logs from clients to the main Logstash servers, much like syslog-ng.

Prerequisites:

- OS: Fedora 21

- RAM: 2GB

- CPU: 2

- Java 7 or later

Install Elasticsearch

Run the following command to import the Elasticsearch public GPG key into rpm:

sudo rpm --import http://packages.elasticsearch.org/GPG-KEY-elasticsearch

Create and edit a new yum repository file for Elasticsearch:

[root@base ~]# cat /etc/yum.repos.d/elasticsearch.repo
[elasticsearch-1.4]
name=Elasticsearch repository for 1.4.x packages
baseurl=http://packages.elasticsearch.org/elasticsearch/1.4/centos
gpgcheck=1
gpgkey=http://packages.elasticsearch.org/GPG-KEY-elasticsearch
enabled=1
[root@base ~]#
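If you would rather script this step than edit the file by hand, a heredoc can write the same repository definition. This is only a minimal sketch: the `write_es_repo` function name and the scratch target file are illustrative assumptions. On a real host you would run it as root and pass /etc/yum.repos.d/elasticsearch.repo as the destination.

```shell
#!/bin/sh
# Sketch: write the Elasticsearch 1.4 yum repository file shown above.
# write_es_repo is a hypothetical helper; $1 is the destination path.
write_es_repo() {
    cat > "$1" <<'EOF'
[elasticsearch-1.4]
name=Elasticsearch repository for 1.4.x packages
baseurl=http://packages.elasticsearch.org/elasticsearch/1.4/centos
gpgcheck=1
gpgkey=http://packages.elasticsearch.org/GPG-KEY-elasticsearch
enabled=1
EOF
}

# Demo against a scratch file so nothing under /etc is touched.
repo_file="$(mktemp)"
write_es_repo "$repo_file"
cat "$repo_file"
```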

yum -y install elasticsearch-1.4.4-1.noarch

Elasticsearch is now installed. Let’s edit the configuration:

vi /etc/elasticsearch/elasticsearch.yml

Find the lines that specify network.bind_host and network.host, uncomment them, and set them to the address Elasticsearch should listen on, so they look like this:

network.bind_host: 192.168.122.1
network.host: 192.168.122.1
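The same edit can be made non-interactively with sed. The sketch below assumes the two settings are present in the file as commented-out lines (as they are in a stock elasticsearch.yml); `set_es_host` is a hypothetical helper, and the demo runs on a scratch file rather than the real configuration.

```shell
#!/bin/sh
# Sketch: uncomment and set network.bind_host / network.host in an
# elasticsearch.yml-style file. set_es_host is a hypothetical helper.
set_es_host() {
    conf="$1"
    addr="$2"
    # -i.bak edits in place and keeps a backup copy next to the file.
    sed -i.bak -E \
        -e "s|^#? *network\.bind_host:.*|network.bind_host: ${addr}|" \
        -e "s|^#? *network\.host:.*|network.host: ${addr}|" \
        "$conf"
}

# Demo on a scratch file that mimics the commented-out defaults.
demo_conf="$(mktemp)"
printf '%s\n' '#network.bind_host: 192.168.0.1' '#network.host: 192.168.0.1' > "$demo_conf"
set_es_host "$demo_conf" 192.168.122.1
cat "$demo_conf"
```

On the real host the call would be `set_es_host /etc/elasticsearch/elasticsearch.yml 192.168.122.1`, run as root.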

Now start Elasticsearch:

service elasticsearch restart

/sbin/chkconfig --add elasticsearch

Now that Elasticsearch is up and running, let’s install Kibana.

[root@base elasticsearch]# ps -ef | grep elastic
elastic+ 14097 1 0 Aug08 ? 00:03:42 /bin/java -Xms256m -Xmx1g -Xss256k -Djava.awt.headless=true -XX:+UseParNewGC -XX:+UseConcMarkSweepGC -XX:CMSInitiatingOccupancyFraction=75 -XX:+UseCMSInitiatingOccupancyOnly -XX:+HeapDumpOnOutOfMemoryError -XX:+DisableExplicitGC -Dfile.encoding=UTF-8 -Delasticsearch -Des.pidfile=/var/run/elasticsearch/elasticsearch.pid -Des.path.home=/usr/share/elasticsearch -cp :/usr/share/elasticsearch/lib/elasticsearch-1.4.4.jar:/usr/share/elasticsearch/lib/*:/usr/share/elasticsearch/lib/sigar/* -Des.default.config=/etc/elasticsearch/elasticsearch.yml -Des.default.path.home=/usr/share/elasticsearch -Des.default.path.logs=/var/log/elasticsearch -Des.default.path.data=/var/lib/elasticsearch -Des.default.path.work=/tmp/elasticsearch -Des.default.path.conf=/etc/elasticsearch org.elasticsearch.bootstrap.Elasticsearch
root 24969 6488 0 03:26 pts/1 00:00:00 grep --color=auto elastic
[root@base elasticsearch]#

Install Kibana

Download Kibana to /opt with the following command:

cd /opt; curl -O https://download.elasticsearch.org/kibana/kibana/kibana-4.0.1-linux-x64.tar.gz

Extract the Kibana archive with tar and open the Kibana configuration file for editing:

[root@base kibana]# cat /opt/kibana/config/kibana.yml | grep -v "^#" | grep -v "^$"
port: 5601
host: "base.vashist.com"
elasticsearch_url: "http://base.vashist.com:9200"
elasticsearch_preserve_host: true
kibana_index: ".kibana"
default_app_id: "discover"
request_timeout: 300000
shard_timeout: 0
verify_ssl: true
bundled_plugin_ids:
 - plugins/dashboard/index
 - plugins/discover/index
 - plugins/doc/index
 - plugins/kibana/index
 - plugins/markdown_vis/index
 - plugins/metric_vis/index
 - plugins/settings/index
 - plugins/table_vis/index
 - plugins/vis_types/index
 - plugins/visualize/index
[root@base kibana]#
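The configuration above lives under /opt/kibana, so the downloaded archive has to be unpacked there first. The exact extraction command is not shown in this post; one possible sketch follows, assuming the tarball wraps everything in a single top-level directory. `extract_into` is a hypothetical helper, and the demo builds a throwaway archive so it can run anywhere.

```shell
#!/bin/sh
# Sketch: unpack a .tar.gz whose contents sit in one top-level directory
# into a fixed destination such as /opt/kibana.
# extract_into is a hypothetical helper: $1 = archive, $2 = destination dir.
extract_into() {
    mkdir -p "$2"
    # --strip-components=1 drops the archive's top-level directory, so the
    # files land directly in the destination.
    tar -xzf "$1" -C "$2" --strip-components=1
}

# Demo with a throwaway archive; on the real host this would be
#   extract_into /opt/kibana-4.0.1-linux-x64.tar.gz /opt/kibana
work="$(mktemp -d)"
mkdir -p "$work/kibana-demo/config"
echo 'port: 5601' > "$work/kibana-demo/config/kibana.yml"
tar -czf "$work/demo.tar.gz" -C "$work" kibana-demo
extract_into "$work/demo.tar.gz" "$work/kibana"
cat "$work/kibana/config/kibana.yml"
```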

In the Kibana configuration file, find the line that specifies the Elasticsearch server URL (elasticsearch_url) and make sure it points at your Elasticsearch host; in the configuration above it keeps the default port 9200. Other web services are already running on port 80 on this machine, so we will use Nginx to serve Kibana on a different port.

Install Nginx:

yum -y install nginx

Create a new Kibana configuration file for Nginx:

[root@base ~]# cat /etc/nginx/conf.d/kibana.conf
server {
    listen 8080;
    server_name base.vashist.com;

    auth_basic "Restricted Access";
    auth_basic_user_file /etc/nginx/htpasswd.users;

    location / {
        proxy_pass http://base.vashist.com:5601;
        proxy_http_version 1.1;
        proxy_set_header Upgrade $http_upgrade;
        proxy_set_header Connection 'upgrade';
        proxy_set_header Host $host;
        proxy_cache_bypass $http_upgrade;
    }
}
[root@base ~]#
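The auth_basic_user_file directive above expects /etc/nginx/htpasswd.users to exist, and this post does not show how it was created. One way to produce an entry is with openssl, sketched below. The `htpasswd_line` helper, the `kibanaadmin` user name and the password are all placeholders chosen for illustration.

```shell
#!/bin/sh
# Sketch: build one "user:hash" line for /etc/nginx/htpasswd.users.
# htpasswd_line is a hypothetical helper; the user name and password
# below are placeholders, not values from this setup.
htpasswd_line() {
    # openssl passwd -apr1 prints an Apache MD5 (apr1) hash, one of the
    # password formats that nginx basic auth accepts.
    printf '%s:%s\n' "$1" "$(openssl passwd -apr1 "$2")"
}

entry="$(htpasswd_line kibanaadmin 'change-me')"
echo "$entry"
# On the real host, as root:
#   htpasswd_line kibanaadmin 'your-password' >> /etc/nginx/htpasswd.users
```

The hash is salted, so the printed line differs on every run; only the `kibanaadmin:$apr1$` prefix stays the same.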

Now restart Nginx and Kibana 4 to put our changes into effect, then check that both services are running:

[root@base ~]# systemctl status nginx.service
● nginx.service - The nginx HTTP and reverse proxy server
   Loaded: loaded (/usr/lib/systemd/system/nginx.service; enabled; vendor preset: disabled)
   Active: active (running) since Sun 2015-08-02 23:27:18 IST; 6 days ago
 Main PID: 1250 (nginx)
   CGroup: /system.slice/nginx.service
           ├─1250 nginx: master process /usr/sbin/nginx
           ├─1251 nginx: worker process

Aug 02 23:27:18 base.vashist.com systemd[1]: Started The nginx HTTP and reverse proxy server.
[root@base ~]#

[root@base kibana]# systemctl status kibana4.service
● kibana4.service
   Loaded: loaded (/etc/systemd/system/kibana4.service; enabled; vendor preset: disabled)
   Active: active (running) since Sun 2015-08-02 23:26:55 IST; 6 days ago
 Main PID: 820 (node)
   CGroup: /system.slice/kibana4.service
           └─820 /opt/kibana/bin/../node/bin/node /opt/kibana/bin/../src/bin/kibana.js

Aug 09 02:53:28 base.vashist.com kibana4[820]: {"@timestamp":"2015-08-08T21:23:28.158Z","level":"info","message":"POST /_msearch?timeout=0...0928791"
[root@base kibana]#
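The status output refers to /etc/systemd/system/kibana4.service, a unit file that is not shown in this post. The sketch below generates one plausible version. Its contents are an assumption, built only from the paths visible in the status output (Kibana under /opt/kibana, log identifier kibana4), and `write_kibana_unit` is a hypothetical helper.

```shell
#!/bin/sh
# Sketch: generate a systemd unit for Kibana 4 installed under /opt/kibana.
# The unit contents are an assumption; the original kibana4.service is not shown.
write_kibana_unit() {
    cat > "$1" <<'EOF'
[Unit]
Description=Kibana 4
After=network.target

[Service]
ExecStart=/opt/kibana/bin/kibana
Restart=on-failure
SyslogIdentifier=kibana4

[Install]
WantedBy=multi-user.target
EOF
}

# Demo against a scratch file.
unit_file="$(mktemp)"
write_kibana_unit "$unit_file"
cat "$unit_file"
# On the real host, as root:
#   write_kibana_unit /etc/systemd/system/kibana4.service
#   systemctl daemon-reload
#   systemctl enable kibana4.service
#   systemctl start kibana4.service
```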

Install Logstash

cd /opt; curl -O https://www.elastic.co/downloads/logstash/logstash-1.5.2.tar.gz

Extract the Logstash archive with tar and set up the configuration to match your dataset:

Choosing a dataset

The first thing you need is some data which you want to analyze. As an example, I have used historical data of the Apple stock, which you can download from Yahoo’s historical stock database. Below you can see an excerpt of the raw data.

[avashist@base conf.d]$ tail -f /home/avashist/Downloads/yahoo_stock.csv
1980-12-26,35.500082,35.624961,35.500082,35.500082,13893600,0.541092
1980-12-24,32.50016,32.625039,32.50016,32.50016,12000800,0.495367
1980-12-23,30.875039,30.999918,30.875039,30.875039,11737600,0.470597
1980-12-22,29.625121,29.75,29.625121,29.625121,9340800,0.451546
1980-12-19,28.249759,28.375199,28.249759,28.249759,12157600,0.430582
1980-12-18,26.625201,26.75008,26.625201,26.625201,18362400,0.405821
1980-12-17,25.874799,26.00024,25.874799,25.874799,21610400,0.394383

Insert the data

Now we have to stream data from the csv source file into the database. With Logstash, we can also manipulate and clean the data on the fly. I am using a csv file in this example, but Logstash can deal with other input types as well.

Now create a new Logstash configuration file:

[avashist@base ~]$ vi /opt/logstash-1.5.2/conf.d/stock_yahoo.conf
input {
  file {
    path => "/home/avashist/Downloads/yahoo_stock.csv"
    start_position => "beginning"
  }
}

Explanation:

The input section of the configuration file tells Logstash to use the csv file as its data source and to start reading at the beginning of the file.

Now that Logstash is reading the file, it needs to know what to do with the data, so we configure the csv filter.

filter {
  csv {
    separator => ","
    columns => ["Date","Open","High","Low","Close","Volume","Adj Close"]
  }
  mutate {convert => ["High", "float"]}
  mutate {convert => ["Open", "float"]}
  mutate {convert => ["Low", "float"]}
  mutate {convert => ["Close", "float"]}
  mutate {convert => ["Volume", "float"]}
}

Explanation:

The filter section tells Logstash which format the dataset is in (csv in this case). We also give the names of the columns, which become the field names in the output. We then convert the Open, High, Low, Close and Volume fields to float, so that Kibana treats them as numbers rather than strings.
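To make the column mapping concrete, here is a rough imitation of it in plain awk, applied to one line from the excerpt above. This is not Logstash and does no type conversion; it only illustrates how each comma-separated value is paired with a column name. `label_columns` is a hypothetical helper.

```shell
#!/bin/sh
# Sketch: mimic the csv filter's column mapping for lines read from stdin.
# This is plain awk, not Logstash; it only illustrates the idea.
label_columns() {
    awk -F',' -v names='Date,Open,High,Low,Close,Volume,Adj Close' '
        BEGIN { n = split(names, col, ",") }
        {
            # Pair the i-th value on the line with the i-th column name.
            for (i = 1; i <= n; i++) printf "%s=%s\n", col[i], $i
        }'
}

fields="$(printf '%s\n' '1980-12-26,35.500082,35.624961,35.500082,35.500082,13893600,0.541092' | label_columns)"
echo "$fields"
```

For the sample line this prints seven name=value pairs, from `Date=1980-12-26` through `Adj Close=0.541092`.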

The last thing is to tell Logstash where to stream the data. As we want to stream it directly to Elasticsearch, we use the elasticsearch output. You can also configure several outputs to stream to different destinations at once; in this case, I have added the stdout output so the events are also printed to the console. It is important to specify an index name for Elasticsearch, because this index is used later when configuring Kibana to visualize the dataset. Below, you can see the output section of our stock_yahoo.conf file.

output {
  elasticsearch {
    host => "base.vashist.com"
    index => "stock_indx"
    action => "index"
    workers => 1
  }
  stdout {}
}
[avashist@base ~]$

Explanation:

The output section is used to stream the input data to Elasticsearch. You also have to specify the name of the index which you want to use for the dataset.

The final step for inserting the data is to run logstash with the configuration file:

[root@base bin]# ./logstash -f ../conf.d/stock_yahoo.conf
Aug 09, 2015 4:30:16 AM org.elasticsearch.node.internal.InternalNode <init>
INFO: [logstash-base.vashist.com-26823-13458] version[1.5.1], pid[26823], build[5e38401/2015-04-09T13:41:35Z]
INFO: [logstash-base.vashist.com-26823-13458] started
Logstash startup completed

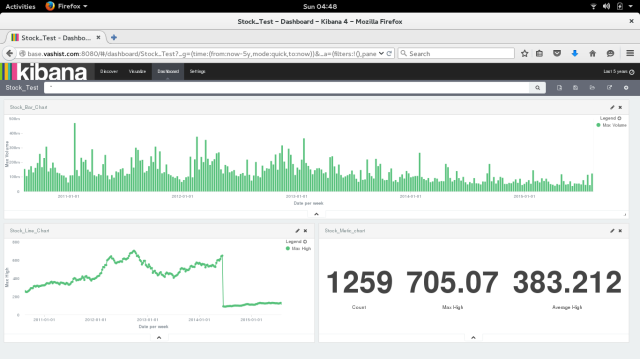
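Once Logstash is running, a quick way to confirm that events are reaching Elasticsearch is to ask for the document count of the index. The sketch below uses the Elasticsearch _count endpoint on the default HTTP port 9200; `count_url` and `count_docs` are hypothetical helper names, and the actual request is left commented out because it needs a reachable Elasticsearch host.

```shell
#!/bin/sh
# Sketch: ask Elasticsearch how many documents an index holds.
# count_url and count_docs are hypothetical helpers.
count_url() {
    # $1 = Elasticsearch host, $2 = index name; 9200 is the default HTTP port.
    printf 'http://%s:9200/%s/_count?pretty\n' "$1" "$2"
}

count_docs() {
    curl -s "$(count_url "$1" "$2")"
}

# Print the URL that would be queried for this setup.
count_url base.vashist.com stock_indx
# On a host that can reach Elasticsearch:
#   count_docs base.vashist.com stock_indx
```

The response is a small JSON document with a count field; a value that grows while Logstash reads the csv file suggests the pipeline is working.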

Connect to Kibana

When you are finished setting up Logstash Forwarder on all of the servers that you want to gather logs for, let’s look at Kibana, the web interface that we installed earlier.

In a web browser, go to the FQDN or public IP address of the server running Kibana, using the port Nginx listens on (8080 in the Nginx configuration above). You should see a Kibana welcome page.

Click on Logstash Dashboard to go to the premade dashboard. You should see a histogram with log events, with log messages below (if you don’t see any events or messages, one of your four Logstash components is not configured properly).

Here, you can search and browse through your logs. You can also customize your dashboard. This is a sample of what your Kibana instance might look like:

GitHub Link : https://github.com/amitvashist7/ELK

Happy Learning 🙂 🙂

Cheers!!
